In the past few months, OpenAI released o1, Qwen released a reasoning model, and the new kid in town is DeepSeek’s R1. We have also decided to release a reasoning model of our own: it is based on LLaMa 405B and is called J1. (I personally think we all need to be a little more creative in naming reasoning models.)

Anyway, this model is now available, and in this article we’re going to break it down for you.

The Importance of Reasoning Models

In the world of Large Language Models, it is important to build models that can imitate human behavior, and reasoning is one of the most important aspects of that behavior.

I personally believe that reasoning models are the key to a lot of things; AGI and Large World Models may be two of them.

We are currently working on J1, and it is working like a charm. It was an amazing moment for us to see the model perform like this; we never imagined the outcome would be this good.

Comparison with other models

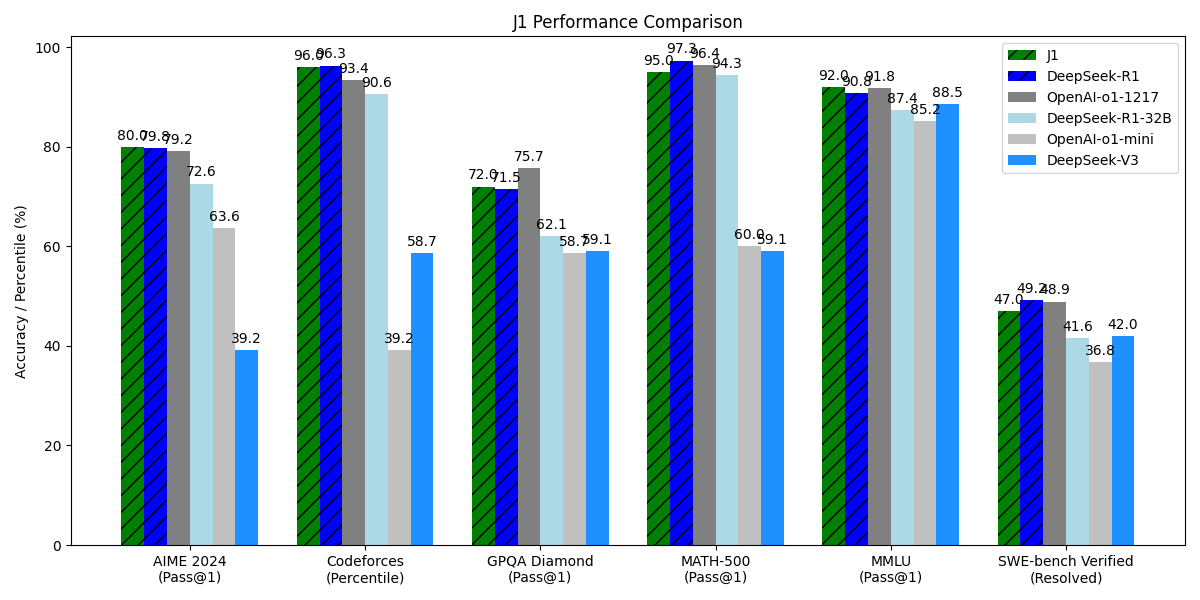

As you can see, J1’s performance is nearly identical to R1’s, and in some cases it even outperforms R1. We’re working hard to improve it further in the near future.

Accessing the model

The model is available completely free of charge through our API and Chatbox.

Future Plans

Adding vision capability

Most reasoning models can’t understand images. Since jabir-400b already has this capability, and since we plan to build some tools on top of our existing models, we are working hard to add it to our reasoning model as well.

The current version doesn’t support vision, but in the near future it will. This is a firm promise.

Making it possible to be a local model

Since we made Choqok, a 1B model that can run on pretty much any computer you can think of, we are on our way to making this model run locally as soon as possible.

We are also going to use this model in Mann-E’s new content creation engine, which we’ll be talking about very soon.

Conclusion

In a world where a new AI model appears on a pretty much hourly basis, it is important for an AI company to find new spaces to compete. J1 is our newest competition ground, and we will keep working to make it better and better in the near future.

If you have any questions, feel free to message us at info@jabirproject.org. We are also open to investors and sponsors.

Regards